- The recently started BELSPO-funded INSIGHT project (Intelligent Neural Systems as Integrated Heritage Tools) is organizing a launch event on 9 November 2017. The event will take the form of an afternoon of plenary talks by internationally recognized speakers on topics relating to Artificial Intelligence, heritage data and digital art history. It will take place at the Musical Instruments Museum in Brussels (Hofbergstraat 2, Brussels) and will be followed by a reception, to which you are cordially invited. Registration is free, but participants are asked to register by sending an email to mike.kestemont@uantwerp.be. The event will be presented by Bart Magnus (PACKED).

Schedule

| Time | Session |
|---|---|
| | Welcome (Mike Kestemont) [slides] |
| 13:00-13:45 | Seth van Hooland (Université Libre de Bruxelles): Understanding the perils of Linked Data through the history of data modeling |
| 13:45-14:30 | Benoit Seguin (École Polytechnique fédérale de Lausanne): The Replica Project: Navigating Iconographic Collections at Scale |
| 14:30-15:15 | Roxanne Wyns (KU Leuven): International Image Interoperability Framework (IIIF). Sharing high-resolution images across institutional boundaries |
| 15:15-15:45 | Break |
| 15:45-16:30 | Saskia Scheltjens (Rijksmuseum Amsterdam): Open Rijksmuseum Data: challenges and opportunities |
| 16:30-17:15 | Nanne van Noord (Universiteit Tilburg): Learning visual representations of style |
| 17:15-18:30 | Reception |

Understanding the perils of Linked Data through the history of data modeling

Seth van Hooland

Based on concrete examples from the cultural heritage world, this talk illustrates how different data models were developed, each one offering its own possibilities and limits. Putting RDF in this larger context helps to develop a more critical understanding of Linked Data.

Seth van Hooland studies document and records management strategies and enjoys cleaning up dirty metadata. He is the deputy head of the Information and Communication Science department and responsible for the Master in Information Science at ULB.

The Replica Project: Navigating Iconographic Collections at Scale

Benoit Seguin

In recent years, museums and institutions have pushed for a global digitization effort of their collections. Millions of artefacts (paintings, engravings, sketches, old photographs, …) are now available in digital photographic form. Furthermore, through the IIIF standards, a significant portion of these images is now accessible online in an easy way. At the other end of the spectrum, recent advances in Machine Learning are allowing computers to tackle increasingly complex visual tasks.

The combination of more data and better technology opens new opportunities for art historians to navigate these collections. While search queries are usually based solely on textual information (metadata, tags, …), we focus on visual similarity, i.e. working directly with the images.

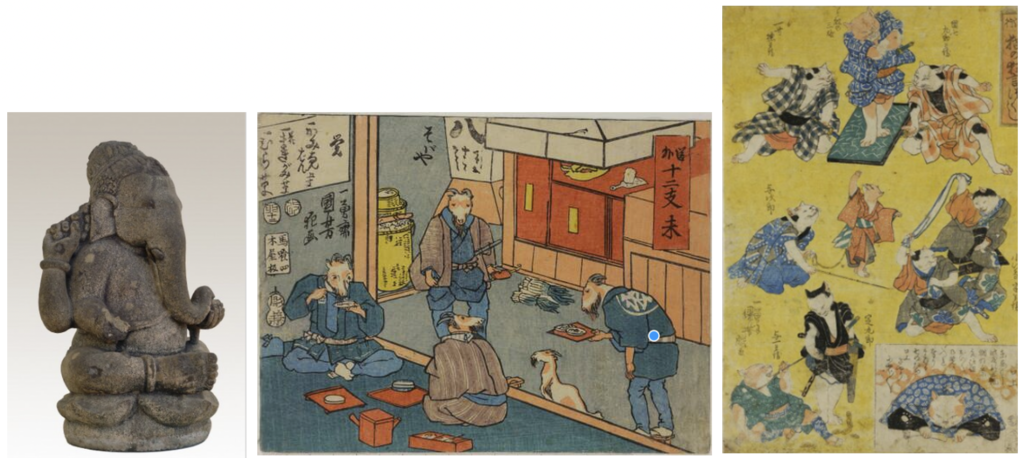

I will briefly report on our digitization effort, the processing of the fototeca (photo archive) of the Fondazione Giorgio Cini, where more than 300,000 images have already been digitized. I will then explain how we worked with art historians to learn a specific image similarity function by leveraging the concept of Visual Links between images. Finally, I will present our work on user interfaces that leverage such a metric, allowing new ways to explore large collections of images, and show how users can further refine the tool.
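To make "navigating by visual similarity" concrete, the sketch below ranks the images of a collection against a query image. It assumes each image has already been turned into a feature vector (for instance by a neural network) and uses plain cosine similarity as a stand-in; it is not the learned similarity function of the Replica project, and the image identifiers and vectors are hypothetical toy values.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no direction to compare
    return dot / (norm_a * norm_b)


def most_similar(
    query_id: str, features: Dict[str, List[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Rank every other image by similarity to the query image; keep the top k."""
    query = features[query_id]
    scores = [
        (image_id, cosine_similarity(query, vec))
        for image_id, vec in features.items()
        if image_id != query_id
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


# Hypothetical toy embeddings; a real system would compute them with a trained model.
features = {
    "painting_a": [0.9, 0.1, 0.0],
    "engraving_b": [0.8, 0.2, 0.1],
    "photo_c": [0.0, 0.1, 0.9],
}
result = most_similar("painting_a", features, k=2)
print(result)
```

Here `engraving_b` ranks first because its vector points in almost the same direction as the query's. Learning the metric itself, as the talk describes, amounts to training the feature extractor so that images an art historian considers visually linked end up close together under such a score.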

Benoit Seguin is a PhD candidate at the Digital Humanities Laboratory of EPFL. Before starting his PhD, Benoit obtained his Diplôme d’Ingénieur at École Polytechnique ParisTech and an MSc in Computer Science from EPFL. Before applying image processing and computer vision to Digital Humanities, he used similar techniques in circuit manufacturing, robotics and medical imaging. The main topic of his PhD is the use of Machine Learning to explore the next generation of tools for digital art history.

Open Rijksmuseum Data: challenges and opportunities

Saskia Scheltjens [slides]

The Rijksmuseum, the National Museum of Art and History of the Netherlands, has been on an open data journey for quite some time now. From opening up a limited set of images and collection data under a CC BY license in 2011 to sharing all of its high-resolution collection images and metadata under a CC0 license in 2013, the Rijksmuseum is hailed as one of the champions of the open content movement in the international museum world. What was the reasoning behind this bold move? Does that reasoning still hold up today? Precisely what kind of content are we speaking of, and what is the impact of the size of the collection and of the datasets involved? This presentation will highlight some of the decisions made in the past and sketch some of the current challenges and new developments the museum is working on.

Saskia Scheltjens (1970) studied Dutch and English Literature and Linguistics, and Information and Library Science at the University of Antwerp (Belgium). She has worked as Head of the Library at the Museum of Contemporary Art in Ostend, and was responsible for a very large reorganisation and the formation of a new Faculty Library of Arts & Philosophy at Ghent University. In 2016 she was asked to set up a new department called Research Services at the Rijksmuseum in Amsterdam. Together with her team, she is responsible for the collection information and data, be it analog or digital, be it the metadata of object collections, documentation, the museum library, object archives and research data. Saskia is fascinated by the interdisciplinary possibilities of digital humanities research and a strong advocate for open data within the digital heritage world.

Learning visual representations of style

An artist’s style is reflected in their artworks, independent of what is depicted. Two artworks by the same artist that depict vastly different scenes (e.g., a beach scene and a forest scene) both reflect that artist's style. Experts, and sometimes even laymen, can use the stylistic characteristics of an artwork to identify the artist who created it. The ability to recognize styles and relate them to artists is associated with connoisseurship. Connoisseurship is essential for the authentication and restoration of artworks, because both tasks require detailed knowledge of the artist's stylistic characteristics. In this talk I will cover a number of studies we performed aimed at realising connoisseurship in a computer, for both recognition and production tasks.

Nanne van Noord is a Postdoc working on the SEMIA (The Sensory Moving Image Archive) project at the Informatics Institute, University of Amsterdam. Previously he was a PhD candidate working on the REVIGO (REassessing VIncent van GOgh) project at Tilburg University. His research interests include deep learning, image processing, and digital cultural heritage.

International Image Interoperability Framework (IIIF). Sharing high-resolution images across institutional boundaries

Roxanne Wyns [slides]

IIIF, or the International Image Interoperability Framework, is a community-developed framework for sharing high-resolution images in an efficient and standardized way across institutional boundaries. Using an IIIF manifest URL, a researcher can pull the images and related contextual information, such as the structure of a complex object or document, metadata and rights information, into any IIIF-compliant viewer such as the Mirador viewer. Simply put, a researcher can access a digital resource from the British Library and one from the KU Leuven Libraries in a single viewer, while the institutions retain control over the quality and context of the resources they offer. KU Leuven implemented IIIF in 2015 in the framework of the idemdatabase.org project and has since used it in a number of Digital Humanities projects focusing on high-resolution image databases for research. The IIIF community has since grown considerably, with institutions such as The J. Paul Getty Trust and the Bibliotheca Vaticana implementing it as a standard and providing swift, standardized access to thousands of resources. Its potential is, however, far from exhausted, with ongoing work on aspects such as IIIF resource discovery and the harvesting of manifest URLs, annotation functions, and an extension to audio-visual material. The presentation will introduce IIIF and its concepts, highlight projects and viewers, and give an in-depth view of its current and future application options.
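Part of what makes IIIF interoperable is that image requests follow a fixed URL pattern defined by the IIIF Image API: `{base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`, with image metadata available at `{base}/{identifier}/info.json`. The sketch below builds such URLs. The server base URL and identifier are hypothetical, the defaults follow Image API 3.0 (size keyword `max`; servers implementing only version 2.0 expect `full` instead), and the sketch covers only the Image API, not the manifests (Presentation API) discussed in the talk.

```python
from urllib.parse import quote


def iiif_image_url(
    base: str,
    identifier: str,
    region: str = "full",     # "full" or "x,y,w,h" in pixels
    size: str = "max",        # e.g. "max", "500," (width 500) or ",300" (height 300)
    rotation: str = "0",      # degrees clockwise
    quality: str = "default",
    fmt: str = "jpg",
) -> str:
    """Build an IIIF Image API request URL for one image."""
    # Identifiers must be URL-encoded, so a "/" inside an identifier becomes %2F.
    ident = quote(identifier, safe="")
    return f"{base.rstrip('/')}/{ident}/{region}/{size}/{rotation}/{quality}.{fmt}"


def iiif_info_url(base: str, identifier: str) -> str:
    """URL of the info.json document describing the image's sizes and tiles."""
    return f"{base.rstrip('/')}/{quote(identifier, safe='')}/info.json"


# Hypothetical server and identifier, for illustration only.
BASE = "https://iiif.example.org/iiif/"
detail = iiif_image_url(BASE, "ms/123", region="0,0,1000,800", size="500,")
info = iiif_info_url(BASE, "ms/123")
print(detail)
print(info)
```

Because every compliant server answers the same URL grammar, a viewer such as Mirador can request a cropped, resized detail from any institution's server without institution-specific code; this is the property that lets images from different libraries sit side by side in one viewer.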

Roxanne Wyns studied History of Art and Archaeology at the Free University of Brussels (VUB). Since 2009 she has worked on several European projects, specialising in standards, multilingual thesaurus management, and data interoperability and aggregation processes. At LIBIS – KU Leuven she supports KU Leuven and its partners in realizing their digital strategy. She is involved in several research infrastructure projects and supports the ‘Services for researchers’ in the framework of Research Data Management (RDM). Roxanne is actively involved in the IIIF community and is a member of the DARIAH-EU Scientific Advisory Board.